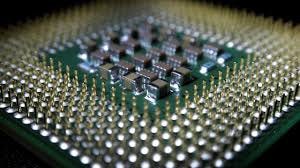

Computing power

A tour of CPU concepts like multicore and Hyperthreading, plus how HTTP client-side caching works.

Hi Friends,

Welcome to the 24th issue of the Polymathic Engineer newsletter.

Today we are hitting a significant milestone: this community now counts 2K+ people interested in algorithms and distributed systems.

Thanks to all the new subscribers and all those who decided to stay. I appreciate it, and I'll try to make this newsletter consistently better.

Today we will talk …